Use the Google SITE Command to Turbocharge Your Marketing Efforts

Updated March 18, 2019

Dial up your website profits by troubleshooting marketing problems using the Google site: command. Read on to learn how to find problems such as non-indexed pages and duplicate content penalties, see which of your pages are the most powerful (in Google’s eyes), and check specific sections of your site, such as your /blog/, /forum/, /wiki/ and more, for issues (and growth).

Site Command Fundamentals

Site Command Request

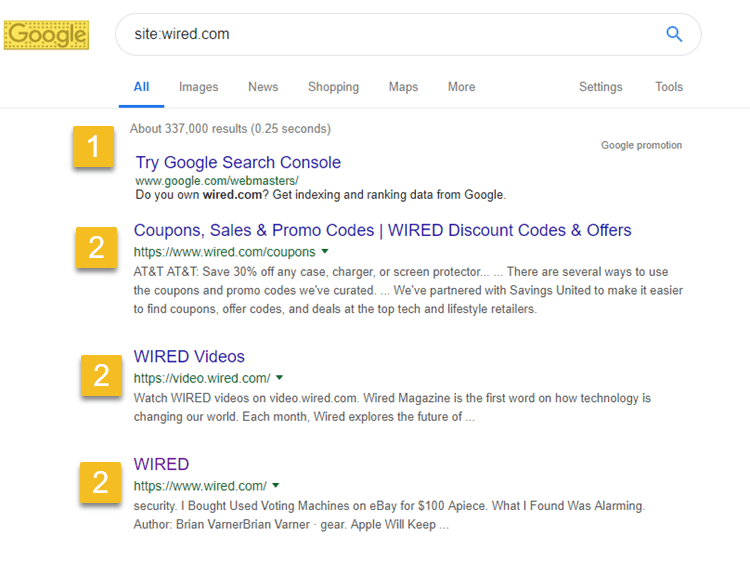

Go to https://www.google.com and click into the search box, inputting this:

site:wired.com

or

site:https://wired.com

[replace wired.com with your domain and extension, or follow along using the wired.com example]
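If you run this check for several domains, it can be handy to generate the search URL instead of typing it each time. Here is a minimal Python sketch of that idea. The helper name `site_query_url` is made up for illustration, and it assumes the standard `https://www.google.com/search?q=` URL pattern.

```python
from urllib.parse import urlencode


def site_query_url(target: str) -> str:
    """Return a Google search URL for the query `site:<target>`.

    `target` can be a bare domain (wired.com) or a full URL prefix.
    """
    # urlencode percent-escapes the colon and any slashes in the query.
    return "https://www.google.com/search?" + urlencode({"q": f"site:{target}"})


if __name__ == "__main__":
    print(site_query_url("wired.com"))
    # prints https://www.google.com/search?q=site%3Awired.com
```

Paste the printed URL into your browser and you land on the same results page you would get by typing the command into the search box.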

Site Command Result

Here’s what you’ll get as the result of the site command:

#1 – ‘About 337,000 results (0.25 seconds)’ shows roughly how many pages Google has spotted on the wired.com website.

#2 – The top 3 results (most powerful pages based on the Google algorithm). These are almost always the homepage and other key section home pages within a site.

Note that the actual results would continue well beyond the top 3, in fact giving a full page of results. Scroll to the bottom and you can paginate further into the site.

Site Command Pagination (Incomplete Result)

In the case of wired.com we’re expecting 337,000 page results. We go to the bottom of the page and look at the pagination showing 10+ pages:

Unfortunately, if we click 10, 15, 20, etc., we don’t get anywhere near page 33,700. In fact, we only get as far as page 40! In this case, Google is only going to show us 393 of 337,000 results!
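To put numbers on that gap, here is a small Python sketch of the arithmetic. The function name is hypothetical, and it assumes the default of 10 results per page.

```python
import math


def pagination_coverage(estimated_results: int, results_shown: int, per_page: int = 10):
    """Compare the pages you would expect with the pages Google actually shows."""
    expected_pages = math.ceil(estimated_results / per_page)
    pages_shown = math.ceil(results_shown / per_page)
    share_shown = results_shown / estimated_results
    return expected_pages, pages_shown, share_shown


if __name__ == "__main__":
    expected, shown, share = pagination_coverage(337_000, 393)
    print(expected, shown, f"{share:.2%}")
    # prints 33700 40 0.12%
```

In other words, a single unsegmented site command surfaces only about a tenth of a percent of the estimated pages in the wired.com example.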

Segment the Site to Get More Results

Often, with sites bigger than a few hundred pages, you’ll have to segment the site command into chunks in order to get more results. To do this, just add a directory to the end of the domain in the command. In the example of wired.com, their top navigation is broken down into categories, like Business, Culture and Gear, to name but a few.

In the case of Gear, it’s the name of a category, and clicking it takes you to the URL: https://www.wired.com/category/gear/

Using the site command to find out about the Gear section of the website, we simply type the following into the search bar:

site:https://www.wired.com/category/gear/

Instead of getting only 393 out of 337,000, we’re now getting those 393 plus an additional 297 results.

More Segmented Results

We can simply stop there, or if it were a big category, we could segment deeper and deeper, like /deals/specials/, /deals/electronics/ and so on. The key is to get as much useful data as possible.
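If you have a lot of sections to check, you can generate the segmented commands in one go. The sketch below is only an illustration: the helper name is made up, and the Business and Culture paths are assumed to follow the same /category/ pattern as Gear, so check them against the real navigation before relying on them.

```python
def segmented_site_queries(base_url: str, sections: list[str]) -> list[str]:
    """Build one site: command per section directory under base_url."""
    base = base_url.rstrip("/")
    # Strip stray slashes so "gear", "/gear" and "gear/" all give the same query.
    return [f"site:{base}/{section.strip('/')}/" for section in sections]


if __name__ == "__main__":
    # Only the Gear URL is confirmed above; the other two are assumptions.
    for query in segmented_site_queries(
        "https://www.wired.com/category", ["gear", "business", "culture"]
    ):
        print(query)
```

Run each printed command in Google and note the result count you reach at the end of the pagination for that section.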

Turbocharge Your Marketing with Site Results

Finding Whether or Not There’s Dupe Content

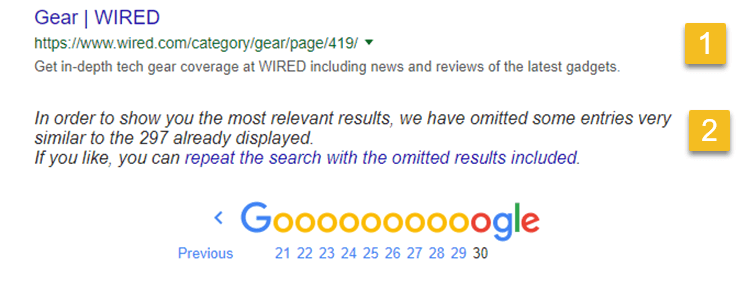

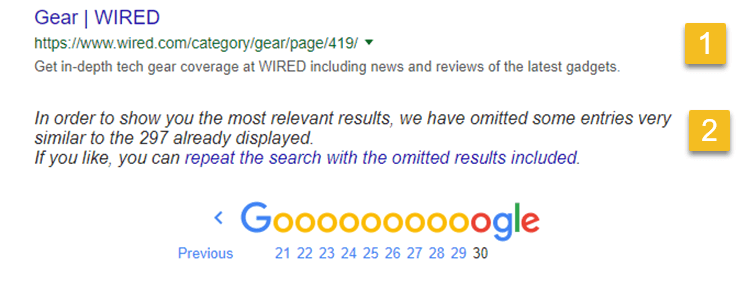

Continuing the Gear example, go to the bottom of the results and paginate to the end (Page 30), so that you see the last of the 297 results, which should look like this:

#1 – shows the last of 297 results, so you know you’re at the end

#2 – shows that in addition to the 297 shown (from the Google primary index), there are more entries blocked from showing up in search results because they are in the secondary (dupe content) index, which rarely appears to searchers.

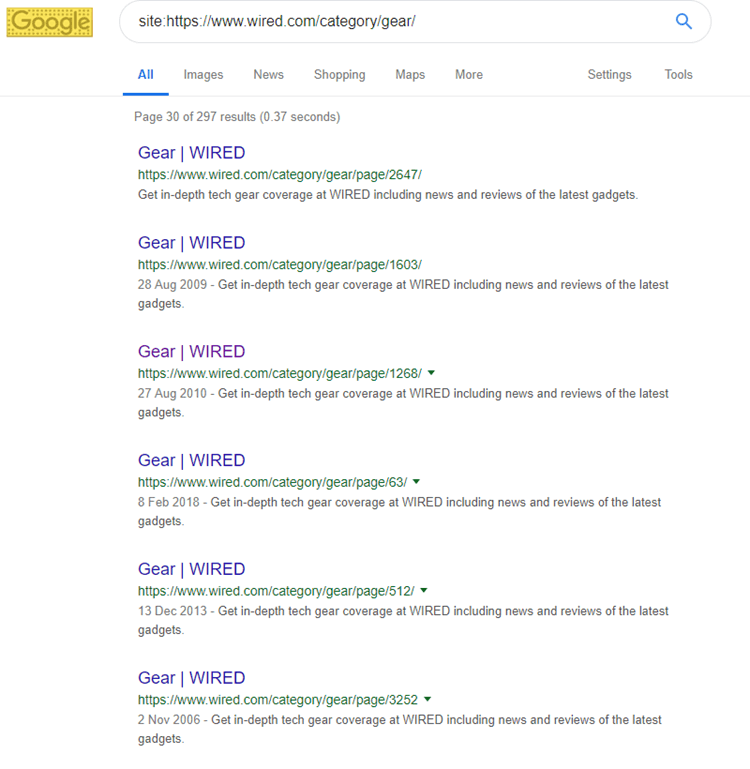

Finding Specific Dupe Content

To find out what is duplicate content, simply click on the link that says, “repeat the search with the omitted results included.”

Now, instead of 297 results, there are 392 results. That’s 297 in the main index, and 95 hidden in the secondary index because of problems. Skim through the results and you’ll spot the ones you hadn’t seen in the 297 results previously.

What’s in that dupe content index? Pages that are light on content or pages that only have content found elsewhere. Typically this includes category pages, tag pages, paginated content pages, or copy-pasted text that’s been repeatedly used and only slightly modified.

In the case of wired.com/category/gear/ the dupe pages are paginated content on URLs like:

https://www.wired.com/category/gear/page/1268/

Those pages are simply paginated listings of posts/pages that they’ve published separately. There’s nothing unique or original on any of those /page/ results, which is why most site owners keep them out of the index. Noindex flagging of your paginated results is one way of preventing Google from flagging large swaths of your content as being duplicate (poor quality). Quality matters!
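If you want to confirm that a paginated page really carries a noindex flag, you can check its HTML for a robots meta tag. The following is a rough Python sketch using only the standard library. The class and function names are hypothetical, it only looks at meta tags (not the X-Robots-Tag HTTP header), and it expects you to supply the page HTML yourself.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Record whether a robots/googlebot meta tag asks for noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        content = (attr.get("content") or "").lower()
        if name in ("robots", "googlebot"):
            directives = [d.strip() for d in content.split(",")]
            # "none" is shorthand for "noindex, nofollow".
            if "noindex" in directives or "none" in directives:
                self.noindex = True


def has_noindex(html: str) -> bool:
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex


if __name__ == "__main__":
    sample = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_noindex(sample))  # prints True
```

Run it against the saved source of a few of your own /page/ URLs to see which ones are flagged and which are still open to indexing.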

Monitor Content Indexing

Check Indexed Page Counts

Refer back to the screenshot used originally for the /category/gear/:

In this scenario, 297 pages are indexed in wired.com/category/gear/. That’s ground zero, the starting point.

After posting several new offers in the Gear category and promoting them, you want to find out whether or not they’re getting indexed. Simply run the same site command, scroll to the end, and see how many pages are indexed. Is it 297 + X new posts? Or is it less?

Simply by tracking the counts of indexed pages against the new posts added, you can find out how successful you are in getting posts/pages indexed.
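The tracking itself is simple arithmetic, sketched below in Python. The function name is made up, and the figures for new posts and the current count are invented for the example; only the 297 baseline comes from the Gear walkthrough. Keep in mind that site: counts are approximate, so treat the result as a trend rather than an exact measure.

```python
def indexing_progress(baseline: int, new_posts: int, current: int):
    """Return (newly indexed pages, share of new posts that got indexed)."""
    newly_indexed = current - baseline  # can be negative if pages dropped out
    rate = newly_indexed / new_posts if new_posts else 0.0
    return newly_indexed, rate


if __name__ == "__main__":
    # 297 is the baseline from above; 10 new posts and a count of 304 are hypothetical.
    indexed, rate = indexing_progress(baseline=297, new_posts=10, current=304)
    print(indexed, f"{rate:.0%}")
    # prints 7 70%
```

A rate well below 100% after a reasonable wait suggests some of the new posts are not making it into the index.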

Spot Title and Meta Description Uniqueness Issues

Site Command + Scroll

Run the site command and scroll down the page, looking at the results. If you want to make life easier, set your search preferences in Google to show 100 results per page instead of 10 – you’ll get a lot more to scroll through.

Simply by scrolling down a few times, you’ll quickly spot commonalities – like titles with repeating text, short titles, meta descriptions with repeating text, meta descriptions that are too short.

As you glance down the results, can you spot the duplication in titles and meta descriptions? They’re verbatim, top to bottom.

When you spot duplication, it either needs to be made unique, on a page by page basis, or it needs to be marked as noindex, so that Google doesn’t run quality control checks on it (and find the duplicated content and meta information).
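Scrolling works for a quick look, but if you have your titles and meta descriptions in a list (from a crawl or a spreadsheet export, for example), a short script can group the repeats for you. This is a minimal Python sketch. The function name, the data layout and the example.com URLs are all assumptions for illustration.

```python
from collections import defaultdict


def find_duplicates(pages: list[dict], field: str) -> dict[str, list[str]]:
    """Group page URLs by a normalized field value and keep only the repeats."""
    groups = defaultdict(list)
    for page in pages:
        # Collapse whitespace and ignore case so near-identical text matches.
        key = " ".join(page[field].split()).lower()
        groups[key].append(page["url"])
    return {text: urls for text, urls in groups.items() if len(urls) > 1}


if __name__ == "__main__":
    # Hypothetical sample data.
    pages = [
        {"url": "https://example.com/a", "title": "Gear Deals", "description": "Best deals."},
        {"url": "https://example.com/b", "title": "Gear  deals", "description": "Best deals."},
        {"url": "https://example.com/c", "title": "Unique Review", "description": "A review."},
    ]
    print(find_duplicates(pages, "title"))
    print(find_duplicates(pages, "description"))
```

Every group it returns is a candidate for either a rewrite or a noindex flag.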

Conclusion

Use the site command to monitor quality, find issues and then stamp them out. It’ll maximize your search results visibility, maximize your search rankings and maximize your profits.