Checking the Domain Access Logs for Abuse and Resource Usage

This article covers the different methods of checking domain access logs in detail: why you should check them, how to spot abuse in them, and how to protect yourself from further incidents.

Remember: the goal is mitigation, that is, reducing the impact your server experiences from such incidents. There is no method of 100% prevention.

Why Check the Domlogs

If you suspect that traffic-based attacks are being carried out against your sites, you’ll need to check the domain access logs, or domlogs. Doing so requires root access over SSH. We will discuss the most common types of abuse, why they occur, and how to identify them via the domlogs. Once you can identify the abuse that is occurring, you can put measures in place to counter it. We will also discuss how to implement different counter-measures against these attacks.

Types of abuse and common resource consumers to look for include:

- wp-login bruteforcing

- xmlrpc abuse

- ‘bad’ bot traffic

- dos/ddos

- admin-ajax/wp heartbeat frequency (not a malicious abuse, but can definitely be over active and impact server resources)

- wp-cron (not exactly an abuse, but can definitely be configured to use less resources)

- calls made to trigger spam scripts

- other malicious requests

- vulnerability scans run against your site(s)

Some reasons to check the domain access logs for abuses and RAM usage include the following:

- Out of Memory errors have been occurring on your server, or the resource usage has increased drastically (RAM, bandwidth, CPU)

- You have noticed a lot of emails coming from your sites’ scripts

- You are seeing a lot of spam being sent from the server

- You are seeing a large, unexpected increase in traffic to the site

- You suspect a hack (website compromise)

- Apache connections are hanging due to MaxRequestWorkers having been exceeded

Apache Domain Access Logs Format

First, to search the domlogs effectively, you need to understand how they’re formatted. You can search the Apache configuration on your server for “LogFormat” to see how the domain access logs are formatted. This article is primarily cPanel-specific, but DirectAdmin commands are included in the scripts attached in the conclusion.

On a cPanel server, the LogFormat is defined in /usr/local/apache/conf/httpd.conf using the Combined Log Format with Vhost and Port as follows:

LogFormat "%v:%p %h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combinedvhost

Each of these format specifiers is defined as follows:

- %v – Vhost/Domain

- %p – The canonical port of the server serving the request.

- %h – Remote hostname of the client making the request. Will log the IP address if HostnameLookups is set to Off, which is the default

- %l – Identity of the client as determined by identd on the client’s machine (a hyphen indicates that the information was not available)

- %u – userid of the person requesting the document as determined by HTTP authentication

- %t – The time that the request was received (the format can be configured as desired)

- \"%r\" – The client’s request line

- %>s – The Apache Status returned

- %b – The size of the response in bytes, excluding the size of the headers.

- \”%{Referer}i\” – The referer

- \”%{User-Agent}i\” – The user-agent

- combinedvhost – Nickname for this log format (“Combined Log Format with Vhost and Port”)

This is the Combined Log Format (the Common Log Format plus the Referer and User-Agent request headers) with the vhost and port prepended. Request headers are logged in the form %{header}i, where header can be any HTTP request header; this format logs the Referer and the User-Agent as shown above.

When using Awk to parse the domlogs, you can reference each of these by a numerical value indicating their field in the output starting with %h. Let’s use the following domlog entry as an example:

172.68.142.138 - - [09/Mar/2019:00:38:03 -0600] "POST /wp-login.php HTTP/1.1" 404 3767 "-" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 SE 2.X MetaSr 1.0"

| Format specifier | Awk field | Description | Value from example above |
|---|---|---|---|
| %h | 1 | IP/hostname of the client | 172.68.142.138 |
| %l | 2 | Client identity via the client’s identd configuration | - |
| %u | 3 | HTTP authenticated user ID | - |
| %t | 4,5 | Timestamp | [09/Mar/2019:00:38:03 -0600] |
| \"%r\" | 6,7,8 | Request method, resource requested, and protocol | "POST /wp-login.php HTTP/1.1" |
| %>s | 9 | Apache status code | 404 |
| %b | 10 | Size of the response in bytes, excluding headers | 3767 |
| \"%{Referer}i\" | 11 | Referer | "-" |
| \"%{User-Agent}i\" | 12+ | User-Agent | "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 SE 2.X MetaSr 1.0" |

The User-Agent can span many fields. I typically find the string identifying a bot at field $14, and sometimes $15.

The domain access logs on a cPanel server are located at /usr/local/apache/domlogs/ and, more recently, also at /etc/apache2/logs/domlogs/. The logs are named for the domain and the protocol used for the request. For example, requests for the domain ‘mydemodomain.tld’ would be logged at /usr/local/apache/domlogs/mydemodomain.tld for http and /usr/local/apache/domlogs/mydemodomain.tld-ssl_log for https.
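Because the User-Agent occupies a variable number of space-separated fields, it can be easier to split each line on the double quote character instead. The following is a minimal sketch, assuming the log format shown in the example entry above; the function name split_domlog is made up for illustration and is not part of cPanel or Apache.

```bash
#!/usr/bin/env bash
# Sketch: split a combined-format log line on double quotes instead of spaces.
# With -F'"' the quoted parts land in fixed fields:
#   $2 = request line, $4 = referer, $6 = full user-agent string
# split_domlog is a hypothetical helper name.
split_domlog() {
  awk -F'"' '{
    split($1, pre, " ")       # pre[1] = client IP
    split($3, post, " ")      # post[1] = status code, post[2] = response bytes
    printf "ip=%s status=%s bytes=%s request=%s agent=%s\n", pre[1], post[1], post[2], $2, $6
  }' "$@"
}

# Example (path as used in this article):
#   split_domlog /usr/local/apache/domlogs/mydemodomain.tld | tail -5
```

Splitting this way keeps the whole User-Agent in one field, so there is no need to guess whether the bot name sits in $14 or $15.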

Given this information, you can use Awk field separators to retrieve the fields you want. Let’s look at an example. The domain myexampledomain.tld is using more bandwidth than normal this month and you want to figure out which requests are responsible. First, you craft a command to see which IPs are sending the most requests, and what types of requests these are, for the current month.

First, let’s search for the current month and year for both http and https logs:

grep -s $(date +"%b/%Y:") /usr/local/apache/domlogs/myexampledomain.tld*

Let’s refine this by using Awk field separators to retrieve only the information we want:

grep -s $(date +"%b/%Y:") /usr/local/apache/domlogs/myexampledomain.tld* | awk '{print $1,$6,$7}'

This will give us the client IPs, the type of request (GET/POST), and the path/file (resource) to which the request was sent.

Now, let’s allow Linux to sort this data for us via sort, then list each request with the same values only once (uniq) while counting how many times it occurs (-c flag), and then sort that by the number of repetitions of each request (sort -n).

grep -s $(date +"%b/%Y:") /usr/local/apache/domlogs/myexampledomain.tld* | awk '{print $1,$6,$7}' | sort | uniq -c | sort -n

At the bottom of the output, we see the following:

74651 /usr/local/apache/domlogs/mydemodomain.tld:123.45.67.890 "POST /blog/wp-login.php 31175 "-"

This seems to indicate a brute-force attack against a WordPress login. There were roughly 74.7 thousand of these requests this month. How much bandwidth did that use, though?

To find out, we need to write a new command parsing for this IP’s requests to the wp-login.php file and add the bytes of each request (field 10) as we go:

grep -s $(date +"%b/%Y:") /usr/local/apache/domlogs/mydemodomain.tld* | grep -F 123.45.67.890 | grep wp-login.php | awk 'BEGIN{printf "Bandwidth used: "}{SUM+=$10}END{print SUM}'

We know that this will result in a large number of bytes, so we may as well convert this to GB to make it easier to read:

1024 bytes = 1 KB

1024 KB = 1 MB

1024 MB = 1 GB

So, we can do the following to have the output shown in GB:

grep -s $(date +"%b/%Y:") /usr/local/apache/domlogs/mydemodomain.tld* | grep -F 123.45.67.890 | grep wp-login.php | awk 'BEGIN{printf "Bandwidth used: "}{SUM+=$10}END{print SUM/1024/1024/1024}'

We can now see that this IP brute-forcing wp-login.php has cost us over 2 GB so far this month (2.16741573531181 GB).

We can also confirm this by multiplying the number of requests times the number of bytes since each request used the same number of bytes:

31175 * 74651 = 2327244925 bytes

You can see how being able to understand each field helps with parsing the logs to better understand your sites’ traffic. Let’s look at some types of domlog abuses and requests with excessive resource usage.
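The same bandwidth calculation can be run for every client at once rather than for one IP at a time. This is a rough sketch, assuming the field layout from the table above (response size in field 10); bandwidth_by_ip is a hypothetical helper name, and it reads the log files directly so that field 1 is the bare IP without the file-name prefix that grep adds.

```bash
#!/usr/bin/env bash
# Sketch: total response bytes (field 10) per client IP, largest first.
# bandwidth_by_ip is a hypothetical helper name used only for this example.
bandwidth_by_ip() {
  awk '$10 ~ /^[0-9]+$/ { bytes[$1] += $10 }   # skip "-" (no body sent)
       END {
         for (ip in bytes)
           printf "%s %.0f %.4f\n", ip, bytes[ip], bytes[ip] / 1024 / 1024 / 1024
       }' "$@" |
    sort -k2,2nr
}

# Output columns: IP, bytes, GB (bytes / 1024^3)
# Example: bandwidth_by_ip /usr/local/apache/domlogs/mydemodomain.tld*
```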

WordPress Login Bruteforcing

WordPress Login brute-forcing occurs when an attacker repeatedly attempts to authenticate via your wp-admin/ url using many different passwords. They are attempting to guess the password in use. Unfortunately, due to weak passwords and frequent password reuse, these attacks are often successful. Due to the number of guesses required for this attack to work, attackers will automate the attack using large password lists. This means that your site’s wp-admin/ URL will be bombarded with numerous requests (often several thousand!). You can see how this can cause your resource usage to skyrocket. You will occasionally see this attack coupled with xmlrpc abuse as it can be used to amplify the brute-force attack.

To identify the attack, you will need to search the domain access logs for all POST requests to the wp-login.php file. Grouping these by IP will allow you to determine which IPs you can block in the firewall to temporarily thwart the attack (provided they are not using a large range of proxy IPs to facilitate the attack). Blocking IPs is only a temporary band-aid, though.

To effectively stop the attack, you would need to protect the WordPress login. There are many plugins that can help with this. Some may change the login URL to a unique URL that only you would know, others may rate-limit the number of login attempts, and others may block all access to the login from all but a few IPs that you specify in a whitelist. You can also password protect the WordPress login via .htaccess rules. In fact, many of these protections could be implemented in the .htaccess without the need for security plugins if you are comfortable writing and testing your own .htaccess rules.

The command I use to identify this attack for the current day on a cPanel CentOS server running Apache is the following:

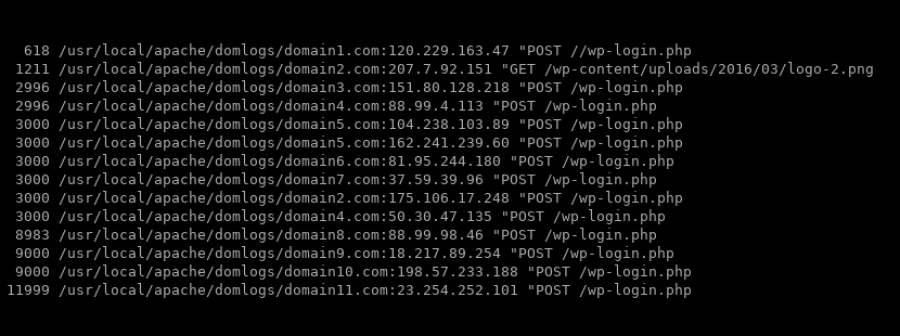

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep wp-login.php | awk '{print $1,$6,$7}' | sort | uniq -c | sort -n

This will output a list showing how often each IP hit which site’s wp-login.php, and via what request method (POST/GET), for the current day, formatted similar to the following:

You can see that the IPs responsible for the most attempts at brute-forcing your WordPress login are those listed at the bottom. You can then block these IPs accordingly until you can implement more effective protection against this type of abuse, such as a web application firewall.
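To turn that listing into a short block list, one option is to keep only the IPs above a chosen number of POST requests. The sketch below assumes the field positions described earlier; the function name wp_login_offenders and the default threshold of 100 are illustrative choices, not recommendations.

```bash
#!/usr/bin/env bash
# Sketch: list client IPs that sent at least MIN POST requests to wp-login.php.
# wp_login_offenders and the default threshold are illustrative assumptions.
wp_login_offenders() {
  local min=${1:-100}
  shift
  awk -v min="$min" '
    $6 == "\"POST" && $7 ~ /wp-login\.php/ { hits[$1]++ }
    END { for (ip in hits) if (hits[ip] >= min) print hits[ip], ip }
  ' "$@" | sort -n
}

# Example: wp_login_offenders 500 /usr/local/apache/domlogs/mydemodomain.tld*
```

Review the resulting IPs before blocking them, since a shared office or proxy address can legitimately produce many login requests.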

XMLRPC Attacks

Another very common type of abuse we often see is the XMLRPC attack. XMLRPC is used in WordPress to transmit XML data via HTTP to different systems. XMLRPC is being replaced with the WP API, though WordPress will continue to support it for some time due to backward compatibility, so you should still be checking for this type of attack. The XMLRPC file can be used to amplify brute-force attacks so that you only see a single request in the domlogs, but that single request contains many bruteforce requests. This is done through the use of the XMLRPC system.multicall method to execute multiple methods inside a single request. This is one reason why the XMLRPC is a popular attack vector.

To stop/prevent XMLRPC attacks, you need to protect the xmlrpc.php file. There are a few considerations with this, though. First of all, some plugins rely heavily on xmlrpc. One such popular plugin is Jetpack. So, first you need to decide whether you use XMLRPC on your site. If you do not, great! Then it is really easy. Just block access to XMLRPC via .htaccess:

<Files xmlrpc.php>
ErrorDocument 403 default
order deny,allow
deny from all
</Files>

If you do use Jetpack, you could block access conditionally depending on whether the IP is Jetpack’s IP or not:

<Files xmlrpc.php>
ErrorDocument 403 default
order deny,allow
allow from 185.64.140.0/22
allow from 2a04:fa80::/29
allow from 2620:115:C000::/44
allow from 76.74.255.0/25
allow from 76.74.248.128/25
allow from 198.181.116.0/22
allow from 192.0.64.0/18
allow from 64.34.206.0/24
deny from all
</Files>

Another option would be to continue to allow access if you are using Jetpack, but enable their Protect module, which claims to protect from this attack.

Alternative options include Mod_Security, WAFs like Sucuri’s that block system.multicall requests, or security plugins that offer protections.

If you choose to use .htaccess rules to block this attack, you will still find that automated attacks continue for some time afterward, filling the logs with 403 responses and “client denied by server configuration” errors. You can limit the work Apache does filtering and blocking these requests by using the LF_APACHE_403 setting in the CSF/LFD firewall to block an IP after it exceeds a specified number of 403 responses within a specified time frame. This limit should be set high, and you should account for legitimate bots in your firewall’s whitelist and ignore files if you choose to use this option.

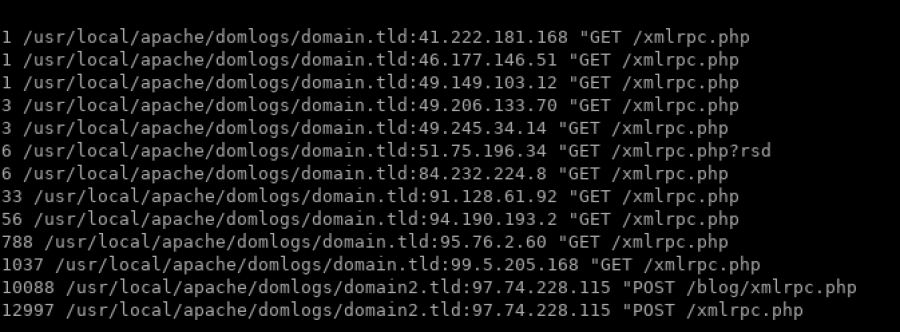

To identify this type of attack in the domain access logs, you simply need to look for POST requests to the xmlrpc.php file within the suspected time frame and sort the data into a readable format. I use the following command to identify whether any XMLRPC attack has occurred for the current day on a cPanel/CentOS server running Apache:

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep xmlrpc | awk '{print $1,$6,$7}' | sort | uniq -c | sort -n

This will list the number of requests that each IP is responsible for and to what site. You may be able to temporarily block IPs based on this output and then enable protection on the xmlrpc.php file for a more permanent resolution.

Bot Traffic

There are two types of bots as far as most admins are concerned: bad bots and good bots. Good bots are great. They are responsible for indexing your site, which is directly correlated to your site’s SEO, and responsible for driving traffic from search results to the indexed site. They may crawl a bit more than you have resources for, though. Luckily, you can request that they crawl less via the robots.txt file. The robots.txt file is a useful tool and I recommend that you familiarize yourself with it and how to use it. You can use it to request that bots ignore and not index sensitive content (paid content, files containing keys, codes, or configurations that you don’t want anyone unauthorized to have access to, for example).

To limit how often a bot crawls your site, you can define a crawl delay in your robots.txt file for all bots. The following robots.txt contents ask all bots to wait 3 seconds between requests:

User-agent: *
Crawl-delay: 3

Unfortunately, not all bots obey these robots.txt requests. These are often termed ‘bad’ bots. When you are being bombarded by ‘bad’ bot traffic, you can block those bots via .htaccess. The following is an .htaccess rule that blocks bots that many have deemed ‘bad’:

RewriteEngine On
RewriteCond %{HTTP_USER_AGENT} ^.*(Ahrefs|MJ12bot|Seznam|Baiduspider|Yandex|SemrushBot|DotBot|spbot).*$ [NC]
RewriteRule .* - [F,L]

To find how often a bot is hitting your sites for the current day, you can use the following command:

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep "bot\|spider\|crawl" | awk '{print $6,$14}' | sort | uniq -c | sort -n | tail -25

If you have a lot of sites on your server, and a lot of bot traffic, you may want to start by editing the .htaccess or robots.txt file for the sites with the most bot traffic so that you have the most impact on resources with the least effort. You can use the following command to search for common bots and see which sites they hit the most (if the previous command shows a lot of bots that are not listed here, be sure to add them to the search):

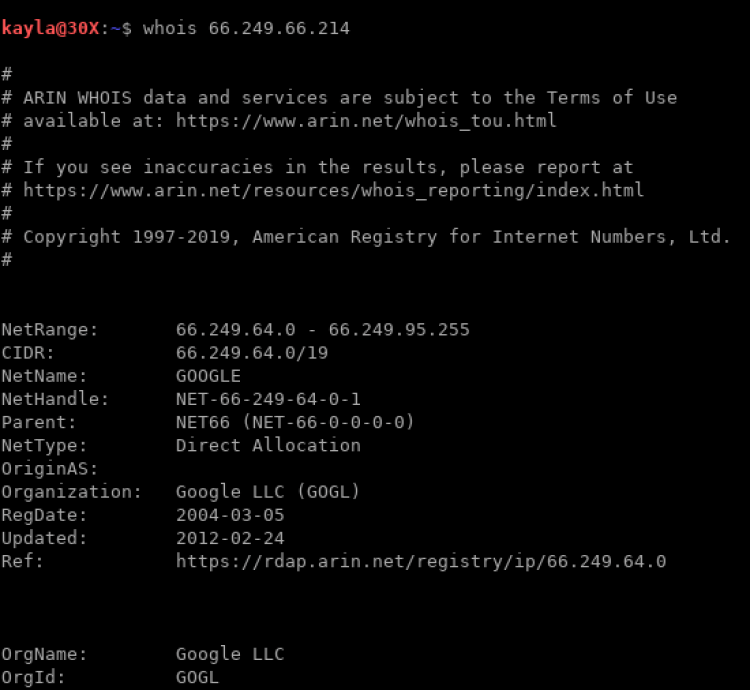

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep -i "BLEXBot\|Ahrefs\|MJ12bot\|SemrushBot\|Baiduspider\|Yandex\|Seznam\|DotBot\|spbot\|GrapeshotCrawler\|NetSeer" | awk '{print $1}' | cut -d: -f1 | sort | uniq -c | sort -n

Now, before you end up blocking Googlebot because you believe it to be hitting your sites 10 thousand times in one day, make sure that the IPs associated with the bot actually belong to Google. Malicious actors will spoof their user agents to make them look like a respected bot, such as Googlebot, Yahoo Slurp, Bingbot, etc. To make sure that the IPs you are about to block or limit actually belong to the bot, you can search for the bot in the domlogs to get the IPs these requests originate from, and then run a WHOIS on each IP. For example, I found some requests with a Googlebot user agent on one site. To determine whether these requests were really from Googlebot, I needed to get a list of the IPs making these requests, and then run a WHOIS on them. The following command will generate a list of IPs in order of frequency:

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep bot | grep -i googlebot | awk '{print $1}' | cut -d: -f2 | sort | uniq -c | sort -n

Now that you have the list of IPs, run the WHOIS command for each IP (replace xxx.xxx.xxx.xxx with the actual IP):

whois xxx.xxx.xxx.xxx

For the sake of demonstration, let’s say you find the following results when searching for a particular bot user-agent:

23 66.249.66.214

23 66.249.66.92

25 66.249.66.218

25 66.249.66.79

30 66.249.66.77

35 66.249.66.78

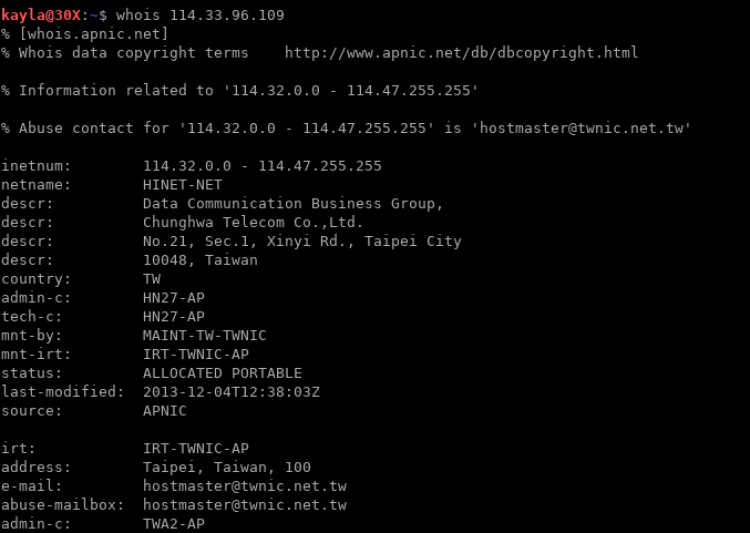

2999 114.33.96.109Running a WHOIS on the IPs in the range 66.249.66.* results in something like the following (screenshot truncated for the sake of brevity):

However, what about that last IP that is not like the others?

That IP doesn’t appear to belong to Google and the user at that address likely spoofed the user agent, which potentially implies malicious intent. Coupled with the number of requests sent on this particularly low-traffic site, I would go ahead and block this IP.
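Running WHOIS by hand for each address gets tedious, so the lookup can be wrapped in a small loop. This is only a sketch: ip_owner and the WHOIS_CMD override are hypothetical names, and the field labels it matches (OrgName, org-name, netname) are an assumption that covers common registry output rather than a complete parser.

```bash
#!/usr/bin/env bash
# Sketch: print the registered network/organisation name for each IP given.
# WHOIS_CMD is a hypothetical override hook; it defaults to the system whois client.
# WHOIS field names differ between registries, so the labels matched below are an
# assumption and may need adjusting for some address ranges.
WHOIS_CMD=${WHOIS_CMD:-whois}

ip_owner() {
  local ip owner
  for ip in "$@"; do
    owner=$("$WHOIS_CMD" "$ip" 2>/dev/null |
      awk -F': *' 'tolower($1) ~ /^(orgname|org-name|netname)$/ { print $2; exit }')
    printf '%s %s\n' "$ip" "${owner:-unknown}"
  done
}

# Example: ip_owner 66.249.66.78 114.33.96.109
```

An address that claims to be Googlebot but whose owner line does not mention Google deserves a closer look before you decide whether to block it.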

Unfortunately, there is no long-term solution for blocking spoofed user agents pretending to be bots that I am aware of. This is why it is important to be able to monitor your traffic if you notice any unusual spikes.

DoS/DDoS

To identify a DoS (Denial of Service) attack, look for a large number of requests coming from a single IP, or relatively few IPs, that overload services and cause legitimate requests to be denied. A DDoS (Distributed Denial of Service) is more difficult to identify because the requests are distributed over many IPs. With a DDoS, you would see a dramatic, sudden, and relentless increase in traffic to a site or IP from many different IPs.
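One way to see the difference in the logs is to count both requests and distinct client IPs per minute. The sketch below assumes the timestamp layout from the example entry earlier in this article; per_minute is a hypothetical helper name, and it reads log files directly so that field 1 is the bare client IP.

```bash
#!/usr/bin/env bash
# Sketch: requests and distinct client IPs per minute, in log order.
# Many requests from few IPs suggests a DoS; both columns jumping suggests a DDoS.
# per_minute is a hypothetical helper name.
per_minute() {
  awk '{
    minute = substr($4, 2, 17)                 # "[09/Mar/2019:00:38:03" -> "09/Mar/2019:00:38"
    if (!(minute in hits)) order[++n] = minute # remember first-seen order
    hits[minute]++
    if (!seen[minute, $1]++) ips[minute]++     # count each IP once per minute
  }
  END {
    for (i = 1; i <= n; i++) { m = order[i]; print m, hits[m], ips[m] }
  }' "$@"
}

# Output columns: minute, requests, distinct IPs
# Example: per_minute /usr/local/apache/domlogs/mydemodomain.tld | tail -30
```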

Combating a DoS is easy. You would just block the responsible IP(s). A DDoS is a bit more complicated. Traffic will need more advanced filtering, such as the “I’m Under Attack mode” offered by Cloudflare. If you choose to use Cloudflare, remember to whitelist their IPs in the server’s firewall and to install Mod_Cloudflare so that the actual visitors’ IPs are logged in the Apache log rather than Cloudflare’s. Cloudflare is one of the most recommended services for protecting against DoS attacks. They offer a free introductory plan and, in addition to the DoS protection, you benefit from their global CDN and many other features.

One may also consider Apache modules that are designed to help mitigate traffic-based attacks. Two such modules are mod_evasive and mod_reqtimeout, both of which cPanel provides documentation for configuring with the cPanel Apache server.

Yet another option would be the ModSecurity Guardian Log. ModSecurity has the added benefit of acting as a Web Application Firewall and protecting all sites on the server simultaneously against application-level attacks, such as the XMLRPC attack.

Remember that with KnownHost hosting, you don’t have to worry about the following types of DDoS attacks thanks to our complimentary DDoS protection:

- UDP Floods

- SYN Floods

- NTP Amplification

- Volume Based Attacks

- DNS Amplification

- Fragmented Packet Attacks

You do, however, need to prepare yourself for Layer 7 (application-layer) attacks such as spam floods, low-level traffic floods, XMLRPC abuse, and brute-forcing. We’ve already mentioned methods to protect against DoS and DDoS attacks, as well as using ModSecurity for protection at the site level. You can also use WAFs developed specifically for your chosen applications, such as a security plugin for WordPress.

As for protection from incoming spam floods, one could use a myriad of cPanel features such as the following:

- Custom RBLs

- Greylisting

- SpamAssassin

- BoxTrapper

- Mail Filters

- Blackhole Emails Sent to Non-Existent/Default Email Accounts During the Attack

WordPress Heartbeat API

The WordPress Heartbeat API uses the file /wp-admin/admin-ajax.php to send requests every 15-60 seconds from the browser to the server to manage WordPress admin dashboard functions such as autosave, revision logging, file locking, and session management. It does this as long as the dashboard is open. Normally this doesn’t pose much of a problem for most users. However, the usage becomes more obvious when 3rd-party plugin/theme developers use the admin-ajax.php file to make their plugins more dynamic in real time. For sites that have many plugins and themes, and especially if any of those are heavy admin-ajax.php users, this can result in high CPU utilization.

You can detect this in the domain access logs by looking for POST requests to the wp-admin/admin-ajax.php file. You can use the following query to check for this for the current date:

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep admin-ajax | awk '{print $1,$6,$7}' | sort | uniq -c | sort -n

If you see that this is occurring, you can use the Heartbeat Control Plugin to either completely disable or limit the WordPress Heartbeat API’s normal behavior. Even with this plugin, you may still need to do more. Your installed plugins or themes may be calling the admin-ajax.php file as well. If you continue to see high heartbeat usage after installing the Heartbeat Control Plugin, you’ll want to identify the plugin or theme responsible, reach out to the developers, and see if there is any way that its usage can be limited.

There is no easy way to find the culprit. One method would be to disable all plugins and themes, slowly re-enable them one by one, and reload the site for each re-enabled plugin while simultaneously tailing the domain access log and grepping for admin-ajax requests. You would need to note the admin-ajax.php POST requests that occur for each plugin/theme to determine which is responsible for the most utilization and CPU consumption. You could use this command to watch the domain access logs for only admin-ajax.php requests (to check a certain site, replace the asterisk with the domain name followed by an asterisk so that you see requests for both the http and https versions of the site):

tail -f /usr/local/apache/domlogs/* | grep admin-ajax

This method is slow and tedious, though. A better method would be like those discussed below, since they will let you examine the POST request thoroughly and determine what code called it.

Another method would be to use a tool such as GTMetrix to view all requests made on each page load graphically in the browser. GTMetrix will analyze the site and generate a report of all requests under the Waterfall tab. Clicking this, and then clicking the plus sign next to the “POST admin-ajax.php” requests listed in the waterfall, will allow you to view more information about the requests. After you click the POST wp-admin/admin-ajax.php request link, you will want to click the “Post” tab to view information about the POST request sent. This may list information that will allow you to determine which plugin/theme is responsible for these requests. Similar tools include Pingdom Website Speed Test, WebPageTest and Gift of Speed.

Another obvious tool that could assist with finding the responsible plugin/theme would be your browser’s developer tools. You would want to use the Network feature to examine the POST requests sent on each page load/reload to try to determine the origin of the request.
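The domlogs can also narrow the search a little, because the Referer field records which page was open when each admin-ajax.php call was made. The following sketch assumes the browser sent a Referer header and that the log uses the quoted format shown earlier; ajax_by_referer is a hypothetical helper name. It points to the page generating the calls, not directly to the plugin, so treat the result as a starting point.

```bash
#!/usr/bin/env bash
# Sketch: count admin-ajax.php POST requests by referring page.
# Splitting on double quotes puts the request line in $2 and the referer in $4.
# ajax_by_referer is a hypothetical helper name.
ajax_by_referer() {
  awk -F'"' '$2 ~ /^POST .*admin-ajax\.php/ { print $4 }' "$@" |
    sort | uniq -c | sort -n
}

# Example: ajax_by_referer /usr/local/apache/domlogs/mydemodomain.tld*
```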

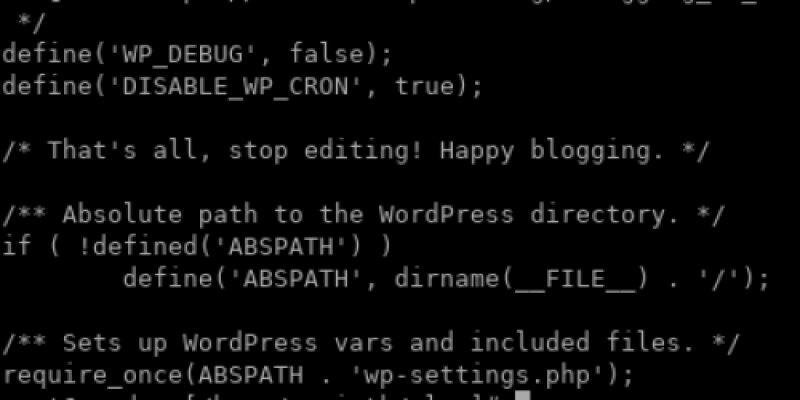

WordPress Cron

WordPress cron isn’t a ‘real’ cron. It is a PHP script that is executed on each page visit. This is great for shared hosting environments where a user may not have access to a real Linux crontab; however, it presents a few problems and should be reconfigured by those who do have access to the crontab. First, for high-traffic sites, calling wp-cron.php on every single page load can have a major performance impact. Second, for low-traffic sites, tasks may not run as often as they should. To determine how often wp-cron has run in the current day, you can use the following command:

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep wp-cron.php | awk '{print $1,$6,$7}' | sort | uniq -c | sort -n

To disable the wp-cron.php script’s execution on every page load, open the wp-config.php file in your main WordPress folder and add the following just after the WP_DEBUG definition and just before the “/* That’s all, stop editing! Happy blogging. */” line:

define('DISABLE_WP_CRON', true);

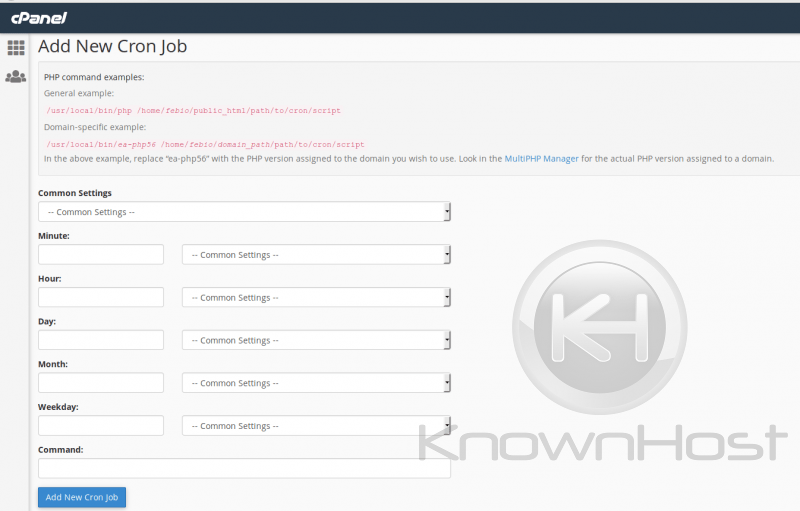

Next, set up a real cron job to execute wp-cron.php. How often you set it to execute really depends on the site. You could check the contents of the cron option in the wp_options table to determine the smallest time frame between scheduled tasks and then run the cron that often. A default WordPress blog with low traffic should be fine with the cron running every 15 minutes to an hour. You can adjust as you see fit.

You can set your cron to use wget, curl, or php. The php command is the best choice since curl and wget requests must be sent across the network. By using php, you increase reliability and eliminate whatever network problems you may otherwise have encountered (routing, filtering, DNS errors, etc.). You can use cPanel to add the cron, or you can edit the crontab using ‘crontab -e’ or your favorite editor (/var/spool/cron/USERNAME).

/usr/bin/php -q /home/USERNAME/public_html/wp-cron.php >/dev/null 2>&1

cPanel has a very helpful interface for configuring cron jobs if you are unfamiliar with the syntax.

You do not want to start 50 wp-cron.php scripts all at the same time and cause Out of Memory errors on your server, so be mindful of this!
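One simple way to avoid that is to give each account a different starting minute. The sketch below only prints candidate crontab lines; stagger_wp_cron is a hypothetical helper, the /home/USERNAME/public_html layout is assumed from the example path above, and the N-59/STEP range syntax is assumed to be supported by the cron implementation on your server.

```bash
#!/usr/bin/env bash
# Sketch: print crontab lines that run wp-cron.php every INTERVAL minutes,
# offsetting each account by one minute so the jobs do not all start together.
# stagger_wp_cron is a hypothetical helper; the /home/USER/public_html layout
# is assumed from the example path in this article.
stagger_wp_cron() {
  local interval=$1
  shift
  local i=0 user
  for user in "$@"; do
    printf '%d-59/%d * * * * /usr/bin/php -q /home/%s/public_html/wp-cron.php >/dev/null 2>&1\n' \
      "$((i % interval))" "$interval" "$user"
    i=$((i + 1))
  done
}

# Example: stagger_wp_cron 15 alice bob carol
```

Each printed line would then be added to the crontab of the matching account.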

Spam Scripts

We’ve already discussed incoming spam briefly as it pertains to DoS attacks. What about outgoing spam? CSF/LFD, which KnownHost provisions our servers with by default, is excellent for notifying you of any spam-related activity. Spam scripts may be uploaded via exploit to the sites on the server, and then triggered via POST requests to send spam messages. In this case, you would look for a large number of spam emails in the queue, bounce-backs from these emails, RBL listings, and/or notifications from LFD and/or cPanel.

One of the most reliable methods to find the source of a spam script is to check the domlogs for those POST requests to the script. LFD relay alerts due to spam-sending scripts will usually contain a hint as to the location of the script responsible (typically a directory containing the script). This information is even contained within the subject of such emails (ex. “Script Alert for '/home/username/public_html/sotpie/'” for root). You can then search the domlogs for the directory location of the script to see exactly which script in that directory is responsible.

The following prints out the site, the external IP making the request, and the script being requested, in increasing order of the number of requests per IP/site:

grep "sotpie" /usr/local/apache/domlogs/username/* | grep POST | awk '{print $1,$7}' | sort | uniq -c | sort -n

Let’s say that you do not have the LFD alert to tell you where the script is located on the server. You could instead search the Exim mainlog first for the location of any scripts responsible for sending email. You could use this:

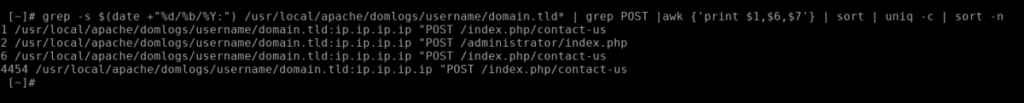

grep "cwd=" /var/log/exim_mainlog | awk -F"cwd=" '{print $2}' | awk '{print $1}' | sort | uniq -c | sort -n

Or, you could just search for POST requests for the current day via the domlogs, and then exclude any common POST requests:

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep POST | grep -vi "wp-cron\|admin-ajax\|xmlrpc\|wp-config" | awk '{print $1,$7}' | sort | uniq -c | sort -n

You will likely have to further filter the output, but you will see what types of POST requests are occurring for your sites and be able to determine if any scripts are being called heavily, indicating the spam script source.

There are many ways to protect against this type of abuse. It really just comes down to securing your sites thoroughly so that no malicious scripts can be uploaded and so that no legitimate functionality can be abused. An example of a legitimate script being abused would be a contact form being used to send spam. Just checking the Exim mainlog for mail-sending scripts and their frequency and POST requests logged in the domlogs could confirm that this is occurring.

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/username/domain.tld* | grep POST | awk '{print $1,$6,$7}' | sort | uniq -c | sort -n

You may see results like this:

Adding reCaptcha and blocking the responsible IP (provided the attacker was using the same IP and not a myriad of proxy IPs) should be quite effective at mitigating this attack.

Malicious Requests

Looking for other types of malicious requests is not as easy. You have to have an idea of what you are looking for. A WAF like ModSecurity is great for filtering and blocking these types of requests. However, it does produce false positives and will require your active involvement over time to configure it to work well with your sites (sites will often make legitimate requests that get flagged, and the ModSecurity rules involved would then need to be adjusted or disabled).

I typically start searching the domlogs for the following:

- pastebin

- wget

- tmp

- /etc/passwd

- ../../wp-config

- root

- python

- UNION

- ' OR 1=1

- %27+OR+1%3D1

- <?php system

- passthru($_GET['

- base64

- %3Cscript%3Ealert

- ?cmd=

- <?php exec

- eval(

- =http

- unlink

- symlink

- syms

These will often show a myriad of requests that were likely initiated as exploitation attempts, including XSS, LFI, RFI, RCE, Symlink attacks, open redirects, path traversal attacks, SQLi, and Apache log poisoning. You will want to review the Apache status codes returned with the results so that you can attempt to determine if any potential attacks were successful.
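Searching for several of these strings in one pass, with the status code shown next to each hit, makes that review quicker. This is a sketch only: suspicious_requests is a hypothetical helper name, and it uses a small subset of the list above because broad terms such as tmp or root match a great deal of legitimate traffic.

```bash
#!/usr/bin/env bash
# Sketch: search logs for a few of the strings listed above and summarize the
# matches by status code, client, and requested path.
# suspicious_requests is a hypothetical helper; extend the pattern list as needed.
suspicious_requests() {
  # -h: omit file names, -i: ignore case, -F: treat patterns as fixed strings
  grep -hiF \
    -e '/etc/passwd' -e '../../wp-config' -e '%27+OR+1%3D1' \
    -e '%3Cscript%3Ealert' -e '?cmd=' -e 'base64' -e 'eval(' \
    -- "$@" |
    awk '{ print $9, $1, $7 }' | sort | uniq -c | sort -n
}

# Output columns: count, status code, client IP, requested path
# Example: suspicious_requests /usr/local/apache/domlogs/mydemodomain.tld*
```

A 200 status next to one of these requests does not prove the attempt succeeded, but those are the entries worth inspecting first.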

Vulnerability Scanning

Exploit scanners will scan for the existence of certain files, such as previously exploited sites that still have a backdoor. You will see many requests searching for popular names for backdoors, such as shell.php, ak47.php, ak48.php, lol.php, b374k.php, cmd.php, system.php, 1.php, zzz.php, qq.php, hack.php, etc. This list is long. This is why features that allow you to block IPs that have exceeded a large number of 404 errors in a given time exist and can be helpful, as these requests can be from an automated vulnerability scanner. These could also be from a bot indexing your site, so unless you know you are under this attack, or you are certain that your permalinks are configured well and that you have no broken links on your site, you should be careful with this setting. It should be set quite high, at least 200 or more per hour. CSF/LFD offers this setting via LF_APACHE_404 and LF_APACHE_404_PERM in the /etc/csf/csf.conf file.
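Before choosing a limit, it can help to see which IPs would actually exceed it. The sketch below counts responses with a given status per IP per clock hour; status_per_hour is a hypothetical helper name, and grouping by clock hour is a simplification that will not match exactly how LFD measures its interval. The same function works for 403 responses when tuning LF_APACHE_403.

```bash
#!/usr/bin/env bash
# Sketch: IPs whose responses with a given status exceed LIMIT within one clock hour.
# status_per_hour is a hypothetical helper name.
status_per_hour() {
  local code=$1 limit=$2
  shift 2
  awk -v code="$code" -v limit="$limit" '
    $9 == code { n[substr($4, 2, 14) " " $1]++ }    # key: "09/Mar/2019:00 <ip>"
    END { for (k in n) if (n[k] > limit) print n[k], k }
  ' "$@" | sort -n
}

# Output columns: count, hour, client IP
# Example: status_per_hour 404 200 /usr/local/apache/domlogs/mydemodomain.tld*
```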

Conclusion

Armed with knowledge regarding the structure of the domain access logs, how to parse these logs, and what types of traffic to look for, you can now analyze your traffic to limit resource usage, combat malicious traffic/attacks, and customize the way you view your sites’ traffic. Below are a few quick bash scripts that you can run to get a quick overview of your system’s traffic. These should be run as the root user.

For Apache on CentOS cPanel servers, you can use this:

#!/usr/bin/env bash

echo ==========================================================================================

echo Checking for WP-Login Bruteforcing:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep wp-login.php | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -10

echo ==========================================================================================

echo Checking for XMLRPC Abuse:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep xmlrpc | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -10

echo ==========================================================================================

echo Checking for Bot Traffic:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | grep "bot\|spider\|crawl" | awk {'print $6,$14'} | sort | uniq -c | sort -n | tail -15

echo ==========================================================================================

echo Checking the Top Hits Per Site Per IP:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -15

echo ==========================================================================================

echo Checking the IPs that Have Hit the Server Most and What Site:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /usr/local/apache/domlogs/* | awk {'print $1'} | sort | uniq -c | sort -n | tail -25

echo ==========================================================================================

echo

For the cPanel plugin NginxCP, you can use this:

#!/usr/bin/env bash

echo ==========================================================================================

echo Checking for WP-Login Bruteforcing:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/nginx/microcache.log | grep wp-login.php | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -10

echo ==========================================================================================

echo Checking for XMLRPC Abuse:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/nginx/microcache.log | grep xmlrpc | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -10

echo ==========================================================================================

echo Checking for Bot Traffic:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/nginx/microcache.log | grep "bot\|spider\|crawl" | awk {'print $6,$14'} | sort | uniq -c | sort -n | tail -15

echo ==========================================================================================

echo Checking the Top Hits Per Site Per IP:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/nginx/microcache.log | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -15

echo ==========================================================================================

echo Checking the IPs that Have Hit the Server Most and What Site:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/nginx/microcache.log | awk {'print $1'} | sort | uniq -c | sort -n | tail -25

echo ==========================================================================================

echo

If you need to gather traffic data on a cPanel server for a specific date, you can use this and enter the date when prompted:

#!/usr/bin/env bash

echo 'Enter date (e.g. 01/Jan/2019): '

read date

echo ==========================================================================================

echo Checking for WP-Login Bruteforcing:

echo ==========================================================================================

grep -s $date /usr/local/apache/domlogs/* | grep wp-login.php | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for XMLRPC Abuse:

echo ==========================================================================================

grep -s $date /usr/local/apache/domlogs/* | grep xmlrpc | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for Overly Active WP Heartbeat aka admin-ajax

echo ==========================================================================================

grep -s $date /usr/local/apache/domlogs/* | grep admin-ajax | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for Bot Traffic:

echo ==========================================================================================

grep -s $date /usr/local/apache/domlogs/* | grep "bot\|spider\|crawl" | awk {'print $6,$14'} | sort | uniq -c | sort -n | tail -25

echo ==========================================================================================

echo Checking for Sites Most Abused by Bots:

echo ==========================================================================================

grep -s $date /usr/local/apache/domlogs/* | grep -i "BLEXBot\|Ahrefs\|MJ12bot\|SemrushBot\|Baiduspider\|Yandex\|Seznam\|DotBot\|spbot\|GrapeshotCrawler\|NetSeer"| awk {'print $1'} | cut -d: -f1| sort | uniq -c | sort -n

echo ==========================================================================================

echo Checking the Top Hits Per Site Per IP:

echo ==========================================================================================

grep -s $date /usr/local/apache/domlogs/* | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -15

echo ==========================================================================================

echo Checking the IPs that Have Hit the Server Most and What Site:

echo ==========================================================================================

grep -s $date /usr/local/apache/domlogs/* | awk {'print $1'} | sort | uniq -c | sort -n | tail -25

echo ==========================================================================================

For DirectAdmin servers running Apache, you can use the following:

#!/usr/bin/env bash

echo ==========================================================================================

echo Checking for OOMs in /var/log/messages:

echo ==========================================================================================

grep -i kill /var/log/messages | grep -v sh | grep -v lfd | grep -v cpanellogd

echo ==========================================================================================

echo ==========================================================================================

echo Checking the OOM count in user_beancounters:

echo ==========================================================================================

grep oom /proc/user_beancounters | awk {'print $6'}

echo ==========================================================================================

echo ==========================================================================================

echo Checking for Unnecessary Processes:

echo ==========================================================================================

ps auxf | grep -i solr

ps auxf | grep -i clamd

echo ==========================================================================================

echo ==========================================================================================

echo Checking for LFD alerts

echo ==========================================================================================

grep lfd /var/log/exim_mainlog

echo ==========================================================================================

echo ==========================================================================================

echo Checking for failed service notifications:

echo ==========================================================================================

grep "FAIL\|RECOVER" /var/log/exim_mainlog

echo ==========================================================================================

echo ==========================================================================================

echo Checking for WP-Login Bruteforcing:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/httpd/domains/* | grep wp-login.php | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for XMLRPC Abuse:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/httpd/domains/* | grep xmlrpc | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for Overly Active WP Heartbeat aka admin-ajax

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/httpd/domains/* | grep admin-ajax | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for Bot Traffic:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/httpd/domains/* | grep "bot\|spider\|crawl" | awk {'print $6,$14'} | sort | uniq -c | sort -n | tail -25

echo ==========================================================================================

echo Checking for Sites Most Abused by Bots:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/httpd/domains/* | grep -i "BLEXBot\|Ahrefs\|MJ12bot\|SemrushBot\|Baiduspider\|Yandex\|Seznam\|DotBot\|spbot\|GrapeshotCrawler\|NetSeer"| awk {'print $1'} | cut -d: -f1| sort | uniq -c | sort -n

echo ==========================================================================================

echo Checking the Top Hits Per Site Per IP:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/httpd/domains/* | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -15

echo ==========================================================================================

echo Checking the IPs that Have Hit the Server Most and What Site:

echo ==========================================================================================

grep -s $(date +"%d/%b/%Y:") /var/log/httpd/domains/* | awk {'print $1'} | sort | uniq -c | sort -n | tail -25

echo ==========================================================================================

echo

For DirectAdmin, when you need to specify the date to check the traffic for, you can use this:

#!/usr/bin/env bash

echo 'Enter date (e.g. 01/Jan/2019): '

read date

echo ==========================================================================================

echo Checking for WP-Login Bruteforcing:

echo ==========================================================================================

grep -s $date /var/log/httpd/domains/* | grep wp-login.php | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for XMLRPC Abuse:

echo ==========================================================================================

grep -s $date /var/log/httpd/domains/* | grep xmlrpc | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for Overly Active WP Heartbeat aka admin-ajax

echo ==========================================================================================

grep -s $date /var/log/httpd/domains/* | grep admin-ajax | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -20

echo ==========================================================================================

echo Checking for Bot Traffic:

echo ==========================================================================================

grep -s $date /var/log/httpd/domains/* | grep "bot\|spider\|crawl" | awk {'print $6,$14'} | sort | uniq -c | sort -n | tail -25

echo ==========================================================================================

echo Checking for Sites Most Abused by Bots:

echo ==========================================================================================

grep -s $date /var/log/httpd/domains/* | grep -i "BLEXBot\|Ahrefs\|MJ12bot\|SemrushBot\|Baiduspider\|Yandex\|Seznam\|DotBot\|spbot\|GrapeshotCrawler\|NetSeer"| awk {'print $1'} | cut -d: -f1| sort | uniq -c | sort -n

echo ==========================================================================================

echo Checking the Top Hits Per Site Per IP:

echo ==========================================================================================

grep -s $date /var/log/httpd/domains/* | awk {'print $1,$6,$7'} | sort | uniq -c | sort -n | tail -15

echo ==========================================================================================

echo Checking the IPs that Have Hit the Server Most and What Site:

echo ==========================================================================================

grep -s $date /var/log/httpd/domains/* | awk {'print $1'} | sort | uniq -c | sort -n | tail -25

echo ==========================================================================================
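The scripts above differ mainly in the log path and the date they search. As an optional sketch, the repeated pipeline can be wrapped in one function that takes those as arguments. The function name top_requests and its argument order are hypothetical choices for illustration, and the field numbers assume the same log format as the scripts above.

```shell
# Sketch only: the repeated "date | pattern | count" pipeline as a function.
# Usage: top_requests DATE PATTERN COUNT LOGFILE...
top_requests() {
    day=$1
    pattern=$2
    count=$3
    shift 3
    # -H keeps the log file name in field 1 so the site stays visible
    grep -sH "$day" "$@" \
        | grep -i -- "$pattern" \
        | awk '{print $1, $6, $7}' \
        | sort | uniq -c | sort -n | tail -n "$count"
}
```

On cPanel this could be called as top_requests "$(date +%d/%b/%Y:)" wp-login.php 10 /usr/local/apache/domlogs/* and on DirectAdmin as top_requests "01/Jan/2019" xmlrpc 20 /var/log/httpd/domains/* for a specific date.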